Ever since the XMLA endpoint came available for data models in the Power BI service, I’ve been thinking about a way to use the Best Practice Analyzer of Tabular Editor to check all the existing data models in the Service in a single batch. And now it’s ready! I have created an automated solution for it, and I’m calling it BPAA (for Best Practice Analyzer Automation).

tl;drⓘ

Use this URL to go to the repo in GitHub and download the BPAA.ps1 script. Before you run it, check the prerequisites below.

The foundation of the solution is in the amazing Tabular Editor. It has a built-in capability to check a model for best practices. Let me quote how Daniel Otykier – the creator of Tabular Editor – introduces this on his website:

A tool that lets you define global or model-specific rules using a simple expression language. At any time, you can check whether objects in your model satisfy the rules. For example, you can create rules to check if naming conventions are kept, if metadata properties are set up correctly, if columns containing numeric values are hidden, if visible objects are exposed in perspectives, etc. The sky is the limit.

Running this on a single model is helpful, but I quickly saw the potential of verifying how best practices are applied to all the models in the Power BI service! Okay, not all of them, just the ones in your tenant

Best Practice Analyzer Automation (BPAA)

Whether or not best practices are applied in a data model can have a significant impact on model size, performance, usability, manageability, transferability, and even correctness of the insights given by the model. It’s not an exact science of course, and it remains subjective and depending on various other factors, but I think we can all agree we should try to stick to best practices wherever we can, right?

It is essential to have a (better) understanding of data model characteristics to be able to:

- Identify models that can be improved (performance, size, usability, manageability, transferability, correctness).

- Identity creators that you can assist and educate, so they can create higher quality models.

- Improve utilization of dedicated capacity in Power BI Premium, as a decrease in model size or decrease in refresh duration has a direct positive effect on the availability of memory and cores in your dedicated capacity. Of course, it is also important for models on shared capacity. Imagine the resources freed up by optimizing all those hundreds of thousands of models in shared capacity. Less visible and indirect, but this will benefit all of us.

Instead of manually running the Best Practice Analyzer of Tabular Editor on each data model in the Power BI Service, I wanted to automate the entire process. Because that’s what we do, right, automating things

The solution I’ve created takes care of authentication, setup, configuration, parameterization, and aggregation of the results. Leaving time for you to focus on the important things: analysis of where and why people are not following best practices!

Best Practice Rules

I’ve mentioned a couple of times now that there are ‘best practices’ for data models. They are captured on this Best Practice Rules repository that serves as a public collection of rules that people use in Tabular Editor and that the community can contribute to. I simply automatically download the Power BI specific rules file and use it in my automation script.

Some example rules:

- Disable auto date/time

- Remove unused measures and columns

- Avoid division (use DIVIDE function instead)

- Do not use floating point data types

- Hide foreign key columns

- Naming Convention

BPAA PowerShell script

I’ve created a single PowerShell script that does all the heavy lifting. It downloads the portable edition of Tabular Editor and the library of commands to talk to the Power BI Service. Then it loops over the workspaces and executes the Best Practice Analyzer on each dataset. It stores the test results locally as .trx files (a standard xml-based output also used by Microsoft MSTest, a command-line utility that runs unit tests). And the metadata of the workspaces and datasets is also stored locally in JSON format.

Power BI report with a calculated score per data model

Of course, I’ve created a Power BI report on top of these files

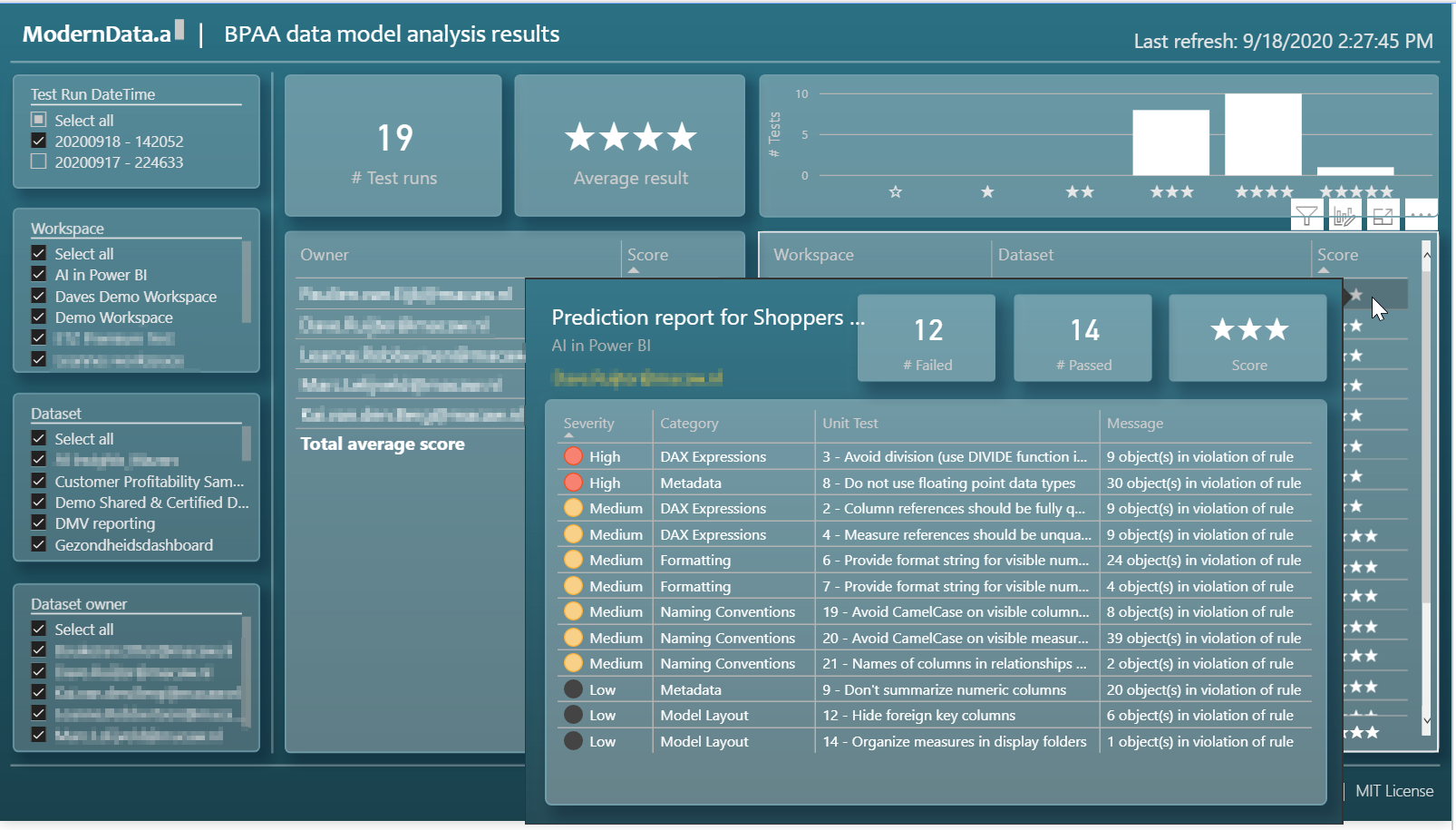

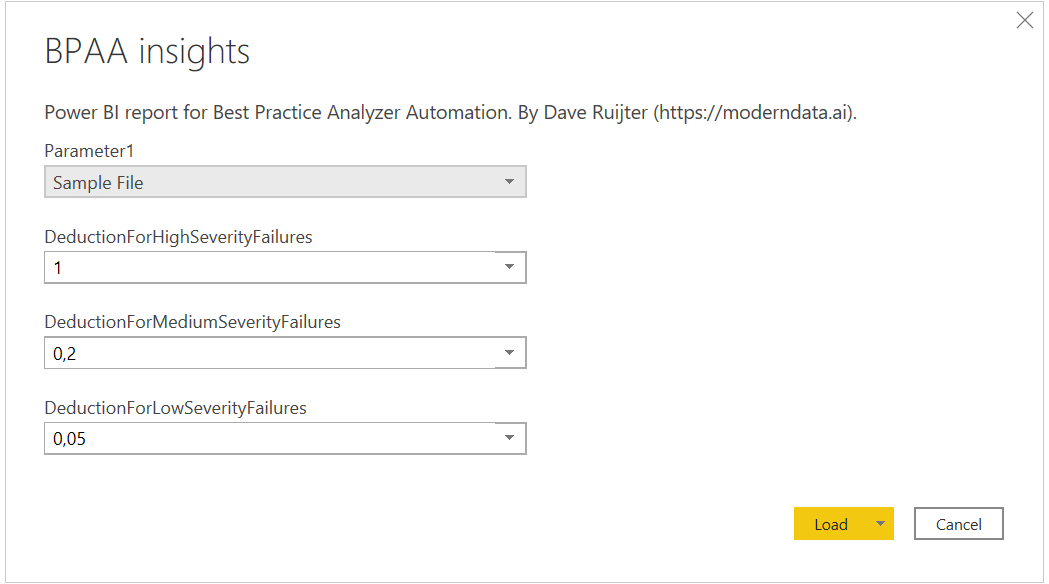

The score is based on an arbitrary deduction of points for each unit test that failed, using the severity from the best practice rules. This is executed in Power Query. Each data model starts with 10 points. High severity failures deduct 1.0 points each, medium severity failures deduct 0.2 point each, and low severity failures deduct 0.05 points each (these values are configurable with parameters). Each data model ends up with a score on this 0 – 10 scale. Then, in DAX, this is converted to be displayed on a 5-stars scale because it looks better

To be able to quickly check why a data model scored low, a tooltip page shows the unit tests that failed and some basic info. More details are available with a drillthrough action. Feel free to extend and customize the report to your preferences. This is a screenshot of the first page, with the tooltip:

Prerequisites

To run my solution, you need to take care of a couple of things upfront:

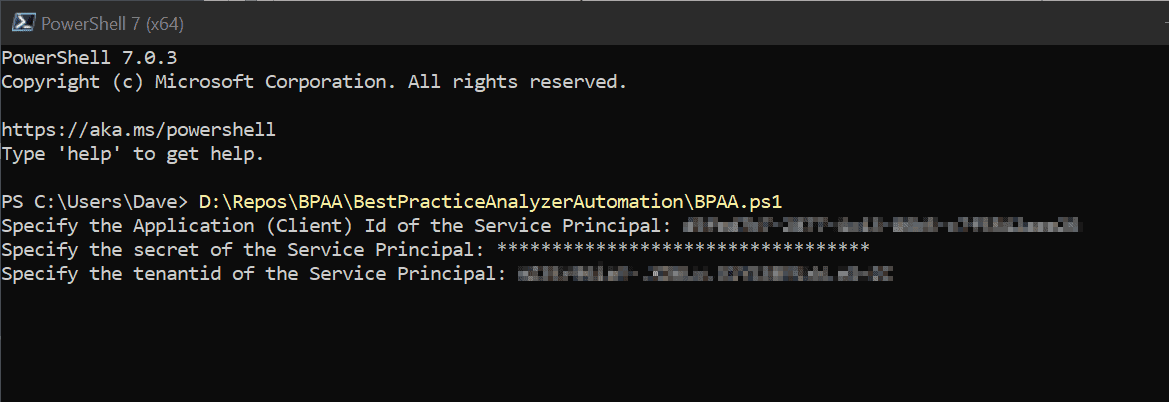

- You need a Service Principal (e.g. App Registration) in Azure Active Directory. Nothing special, just add a secret to it and write down:

- ClientId

- Secret

- TenantId

- Download the latest version of the BPAA solution from the repo in GitHub.

- Unzip the BPAA solution to a directory of choice.

- Add the Service Principal of step 1 to all Power BI workspaces, and make sure it has the ‘member’ or ‘admin’ role. If you have more than a dozen workspaces you would want to script this! No worries, I’ve added a script for that in the BPAA repo. You can find it in the directory of step 3. Note: you need the correct ObjectId of the Service Principal! You can find it in the “Enterprise applications” screen in Azure Active Directory (not the App Registrations screen!).

- The solution only works on v2 workspaces. So, this might be an appropriate time to upgrade those workspaces?

- The solution only works on workspaces in Premium capacity (it needs the XMLA endpoint). You could spin up an Azure Power BI Embedded (A sku) for the duration of this exercise, and connect the workspaces to that capacity. When you’re done you connect them back to shared capacity and suspend the premium capacity.

Important notes/disclaimers

Before running this script, make sure you read these notes/disclaimers first:

- The script will activate all datasets in the Power BI premium capacity/capacities you have running. In case you have overcommitted the capacity (the size of all the uploaded datasets combined exceeds the memory of your capacity), this will result in a lot of dataset evictions. Especially for larger datasets, this might have a noticeable impact on performance of the dataset(s) or reports connected to that dataset (for example in a load time before a report is completely rendered, because the model was evicted and needs to be loaded into memory again). Please consider running the script on a date and time that will have the least impact on daily operations.

- The Service Principal will have permissions to access the data models of all Power BI workspaces in your tenant. Please be incredibly careful and handle the secret with care. Consider storing the details of the Service Principal, including the value of the secret in a private password manager or (Azure Key) vault.

- Tip: consider removing the Service Principal directly after you are finished with the BPAA script. I have a script to remove a Service Principal from all Power BI workspaces.

- Be very considerate in how you interpret, use, and share the results of the best practice analyzer script. The report that comes with the solution will contain a (arbitrary) score per data model owner and please note that this does not reflect the real quality of the data model. It’s just an indication! Not entirely following best practices does not make a model a bad model! Please use the results of this script to help grow the Power BI community in your organization in a positive way and to make people aware of these modelling best practices.

How to run the solution

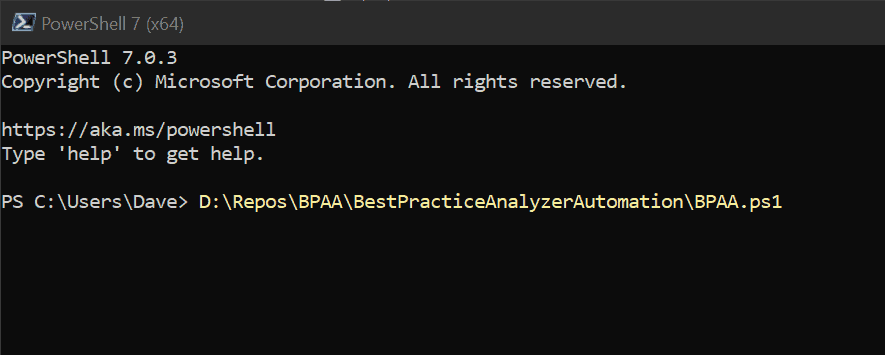

Running the script is easy! Now go for it:

- Run the BPAA.ps1 script that is part of the solution! There are a couple of ways to do this. Perhaps you can right-click on the BPAA.ps1 file and select ‘Run with PowerShell’? Or you start PowerShell from the Windows Start menu, and provide the path to the .ps1 file and press enter. Or, you run the script in Windows PowerShell ISE / Visual Studio Code.

- Provide the properties of the Service Principal to the script.

- Then, after the script is finished, it will open the Power BI template report that is part of the BPAA solution. It will prompt you to accept the parameter values. Click Load.

After the data load, the report will give an overview of the test results!

Roadmap / to do

I’ve got a couple of items on my mind to add to the solution. Let me know what you’d like to see added in the comments below.

- Check if TE is already installed and available via program files

- Embed option to start/suspend A sku

- Embed option to move all non-premium workspaces to a given Premium capacity during script execution

- Embed logic to add the service principal in all workspaces in the Power BI Service (is now a separate script)

- Add support to specify a local BPA rules file (instead of Url)

Want to contribute? Or report an issue?

Please do it via GitHub: https://www.moderndata.ai/BPAA.

Nice! With regards to unused columns, would be nice if it checks across reports in case there is a dataset with multiple reports connecting to it. That would really prove the benefit of checking BPAA on multiple reports at once.

Yes, agreed! Not easy to add, but I think it should be possible..

Can you please add this as a feature request on the GitHub repo?

https://github.com/DaveRuijter/BestPracticeAnalyzerAutomation/issues/new/choose

Easier for me to track these topics!

And perhaps you can help add this feature :)?

Pingback: How to add a Service Principal to all Power BI workspaces in one go - Modern Data & AI

Pingback: How to add a Service Principal to all Power BI workspaces in one go - Tech Daily Chronicle

AddServicePrincipalToAllWorkspaces.ps1

Object Id of the Service Principal = ObjectId of Power BI Service on Enterprise Application ??

$PowerBIServicePrincipalObjectId = Read-Host -Prompt ‘d3xxxxxxxxxxxxxxxxxxxxxxxxxxxx62’

GetCorrectServicePrincipalObjectId.ps1

Application ID of the Service Principal = Application ID of Power BI Service on Enterprise Application??

# Application ID of the Servie Principal from Azure Portal

$spID = “0000xxxxxxxxxxxxxxxxxxxxxxx00000”

Pingback: Power BI Best Practice Analyzer - Pulsweb - Romain Casteres